The Bias-Variance Trade-Off

The bias-variance trade-off states that the squared error of a learning algorithm can be partitioned into three components:

1. Bias: The bias is the error caused by the simplifying assumptions in the model, which causes certain test instances to have consistent errors across different choices of training data sets. Even if the model has access to an infinite source of training data, the bias cannot be removed. For example, in the case of Figure 4.2, the linear model has a higher model bias than the polynomial model, because it can never fit the (slightly curved) data distribution exactly, no matter how much data is available. The prediction of a particular out-of-sample test instance at x = 2 will always have an error in a particular direction when using a linear model for any choice of training sample. If we assume that the linear and curved lines in the top left of Figure 4.2 were estimated using an infinite amount of data, then the difference between the two at any particular values of x is the bias. An example of the bias at x = 2 is shown in Figure 4.2.

2. Variance: Variance is caused by the inability to learn all the parameters of the model in a statistically robust way, especially when the data is limited and the model tends to have a larger number of parameters. The presence of higher variance is manifested by overfitting to the specific training data set at hand. Therefore, if different choices of training data sets are used, different predictions will be provided for the same test instance. Note that the linear prediction provides similar predictions at x = 2 in Figure 4.2, whereas the predictions of the polynomial model vary widely over different choices of training instances. In many cases, the widely inconsistent predictions at x = 2 are wildly incorrect predictions, which is a manifestation of model variance. Therefore, the polynomial predictor has a higher variance than the linear predictor in Figure 4.2.

3. Noise: The noise is caused by the inherent error in the data. For example, all data points in the scatter plot vary from the true model in the upper-left corner of Fig ure 4.2. If there had been no noise, all points in the scatter plot would overlap with the curved line representing the true model.

Formal View

We assume that the base distribution from which the training data set is generated is denoted by $B$. One can generate a data set $D$ from this base distribution:

$D ∼ B$

One could draw the training data in many different ways, such as selecting only data sets of a particular size. For now, assume that we have some well-defined generative process according to which training data sets are drawn from $B$.

Access to the base distribution $B$ is equivalent to having access to an infinite resource of training data, because one can use the base distribution an unlimited number of times to generate training data sets. In practice, such base distributions (i.e., infinite resources of data) are not available. As a practical matter, an analyst uses some data collection mechanism to collect only one finite instance of $D$.

Now imagine that the analyst had a set of $t$ test instances in $d$ dimensions, denoted by $\overline{Z_1} . . .\overline{Z_t}$. The dependent variables of these test instances are denoted by $y_1 . . . y_t$. For clarity in discussion, let us assume that the test instances and their dependent variables were also generated from the same base distribution $B$ by a third party, but the analyst was provided access only to the feature representations $\overline{Z_1} . . .\overline{Z_t}$, and no access to the dependent variables $y_1 . . . y_t$. Therefore, the analyst is tasked with job of using the single finite instance of the training data set $D$ in order to predict the dependent variables of $\overline{Z_1} . . .\overline{Z_t}$.Now assume that the relationship between the dependent variable $y_i$ and its feature representation $\overline{Z_i}$ is defined by the unknown function $f(·)$ as follows:

$y_i=f(\overline{Z_i})+\epsilon_i$

Here, the notation $\epsilon_i$ denotes the intrinsic noise, which is independent of the model being used. The value of $\epsilon_i$ might be positive or negative, although it is assumed that $E[\epsilon_i] = 0$. If the analyst knew what the function $f(·)$ corresponding to this relationship was, then they could simply apply the function to each test point $Z_i$ in order to approximate the dependent variable $y_i$, with the only remaining uncertainty being caused by the intrinsic noise.The problem is that the analyst does not know what the function $f(·)$ is in practice.Note that this function is used within the generative process of the base distribution $B$, and the entire generating process is like an oracle that is unavailable to the analyst. The analyst only has examples of the input and output of this function. Clearly, the analyst would need to develop some type of model $g(Z_i,D)$ using the training data in order to approximate this function in a data-driven way.

$\hat{y}=g(\overline{Z_i},D)$

the variable $\hat{y_i}$ to indicate that it is a predicted value by a specific algorithm rather than the observed (true) value of $y_i$.

All prediction functions of learning models (including neural networks) are examples of the estimated function $g(·, ·)$. Some algorithms (such as linear regression and perceptrons) can even be expressed in a concise and understandable way:

Most neural networks are expressed algorithmically as compositions of multiple functions computed at different nodes. The choice of computational function includes the effect of its specific parameter setting, such as the coefficient vector W in a perceptron. Neural networks with a larger number of units will require more parameters to fully learn the function. This is where the variance in predictions arises on the same test instance; a model with a large parameter set W will learn very different values of these parameters, when a different choice of the training data set is used. Consequently, the prediction of the same test instance will also be very different for different training data sets. These inconsistencies add to the error, as illustrated in Figure 4.2.

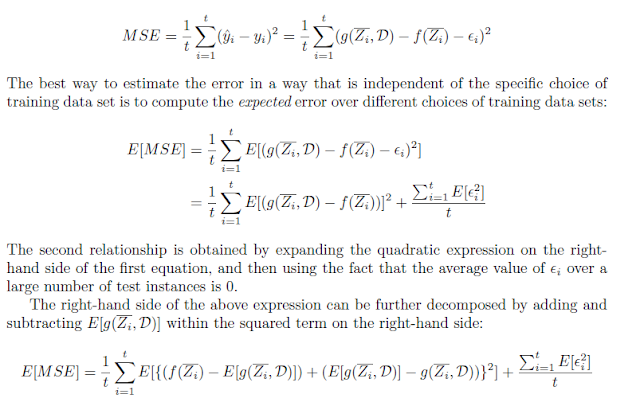

The goal of the bias-variance trade-off is to quantify the expected error of the learning algorithm in terms of its bias, variance, and the (data-specific) noise. For generality in discussion, we assume a numeric form of the target variable, so that the error can be intuitively quantified by the mean-squared error between the predicted values $\hat{y_i}$ and the observed values $y_i$. This is a natural form of error quantification in regression, although one can also use it in classification in terms of probabilistic predictions of test instances. The mean squared error, MSE, of the learning algorithm $g(·,D)$ is defined over the set of test instances $\overline{Z_1} . . .\overline{Z_t}$ as follows:

In other words, the squared error can be decomposed into the (squared) bias, variance, and noise. The variance is the key term that prevents neural networks from generalizing. In general, the variance will be higher for neural networks that have a large number of parameters. On the other hand, too few model parameters can cause bias because there are not sufficient degrees of freedom to model the complexities of the data distribution.This trade-off between bias and variance with increasing model complexity is illustrated in Figure below. Clearly, there is a point of optimal model complexity where the performance

is optimized. Furthermore, paucity of training data will increase variance. However, careful choice of design can reduce overfitting. This chapter will discuss several such choices.

Comments

Post a Comment